I am a PhD candidate at HKU, supervised by Lingpeng Kong.

My current research interests include diffusion language models and long-context language models. I am exploring alternative generation paradigms for better controllability and reasoning capability.

I graduated from Shanghai Jiao Tong University (SJTU), where I was supervised by Kenny Zhu. I previously worked on pose estimation, face recognition, hierarchical text classification, and recommendation systems. ➡️ My CV (updated Jan 2026)

“I can only show you the door, you’re the one that has to walk through it” – Morpheus (The Matrix)

📚 Selected Publications

* indicates equal contribution.

This page highlights my work on diffusion language models. For a complete list of publications, please refer to my CV.

Large-Scale Diffusion LMs

Dream-VL & Dream-VLA: Open Vision-Language and Vision-Language-Action Models with Diffusion Language Model Backbone (technical report)

Jiacheng Ye*, Shansan Gong*, Jiahui Gao, Junming Fan, Shuang Wu, Wei Bi, Haoli Bai, Lifeng Shang, Lingpeng Kong

Open VL and VLA models that fully unlock discrete diffusion’s advantages in long-horizon planning and parallel action generation for multimodal tasks.

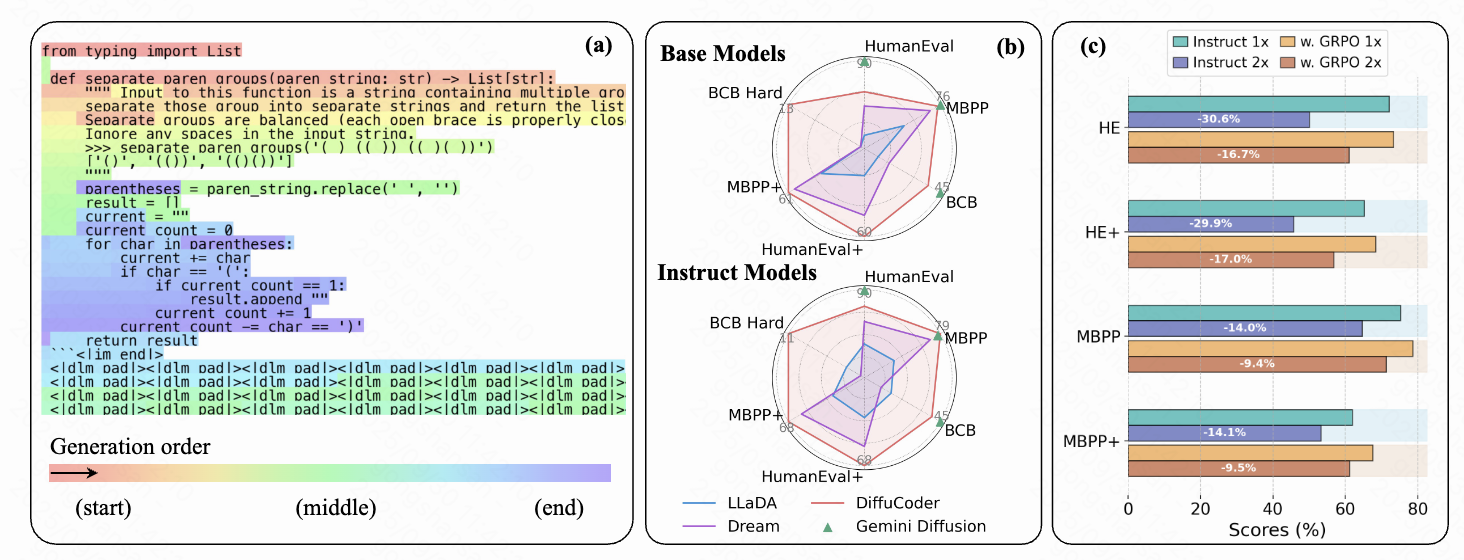

DreamOn: Diffusion Language Models For Code Infilling Beyond Fixed-size Canvas (ICLR 2026)

Zirui Wu, Lin Zheng, Zhihui Xie, Jiacheng Ye, Jiahui Gao, Shansan Gong, Yansong Feng, Zhenguo Li, Wei Bi, Guorui Zhou, Lingpeng Kong

A novel diffusion framework that enables dynamic, variable-length generation.

DiffuCoder: Understanding and Improving Masked Diffusion Models for Code Generation (ICLR 2026)

Shansan Gong, Ruixiang Zhang, Huangjie Zheng, Jiatao Gu, Navdeep Jaitly, Lingpeng Kong, Yizhe Zhang

DiffuCoder

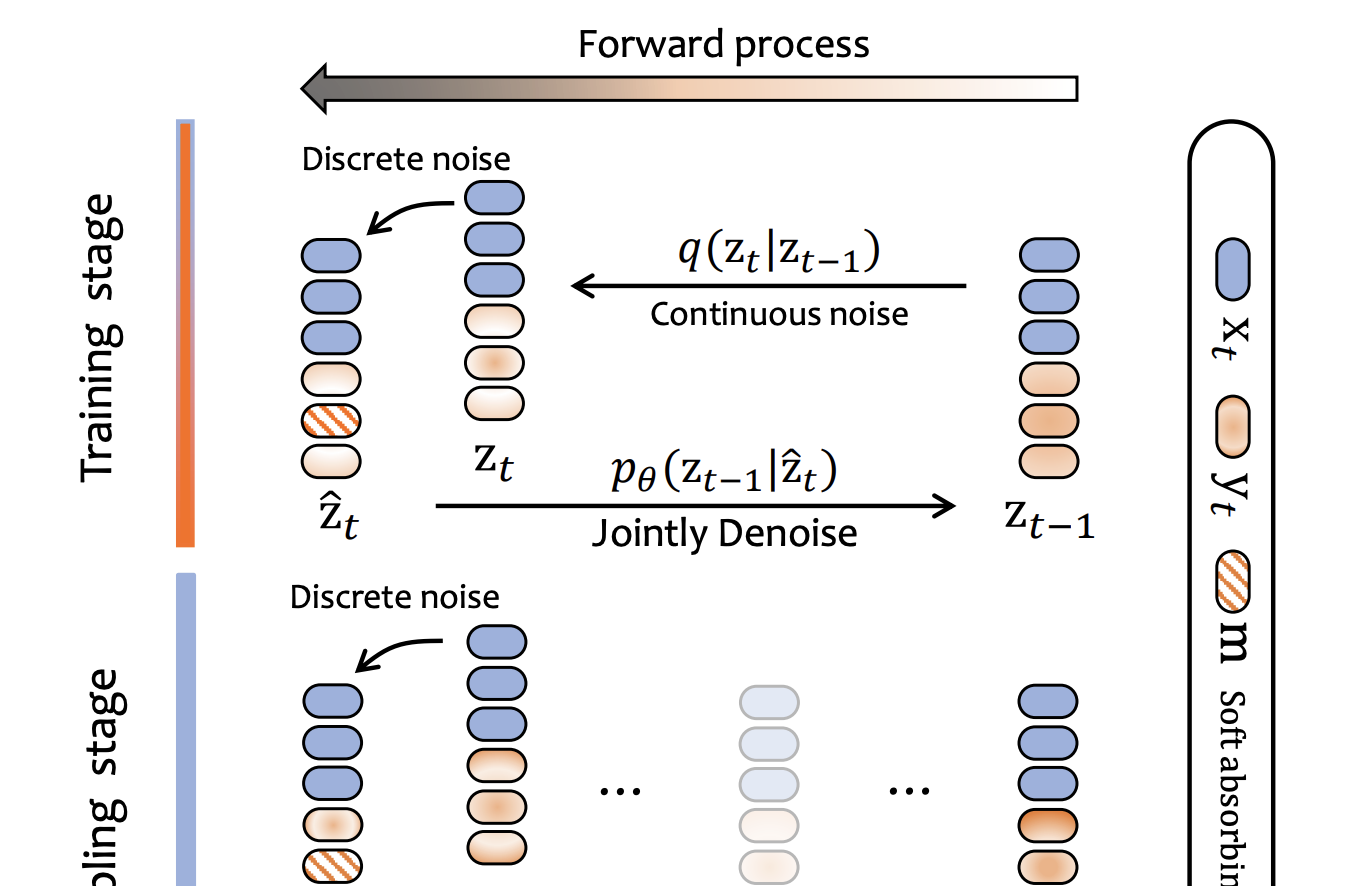

Continuously Augmented Discrete Diffusion model for Categorical Generative Modeling (ICLR 2026)

Huangjie Zheng, Shansan Gong, Ruixiang Zhang, Tianrong Chen, Jiatao Gu, Mingyuan Zhou, Navdeep Jaitly, Yizhe Zhang

We propose CADD, a framework that augments the discrete state space with a paired diffusion in a continuous latent space.

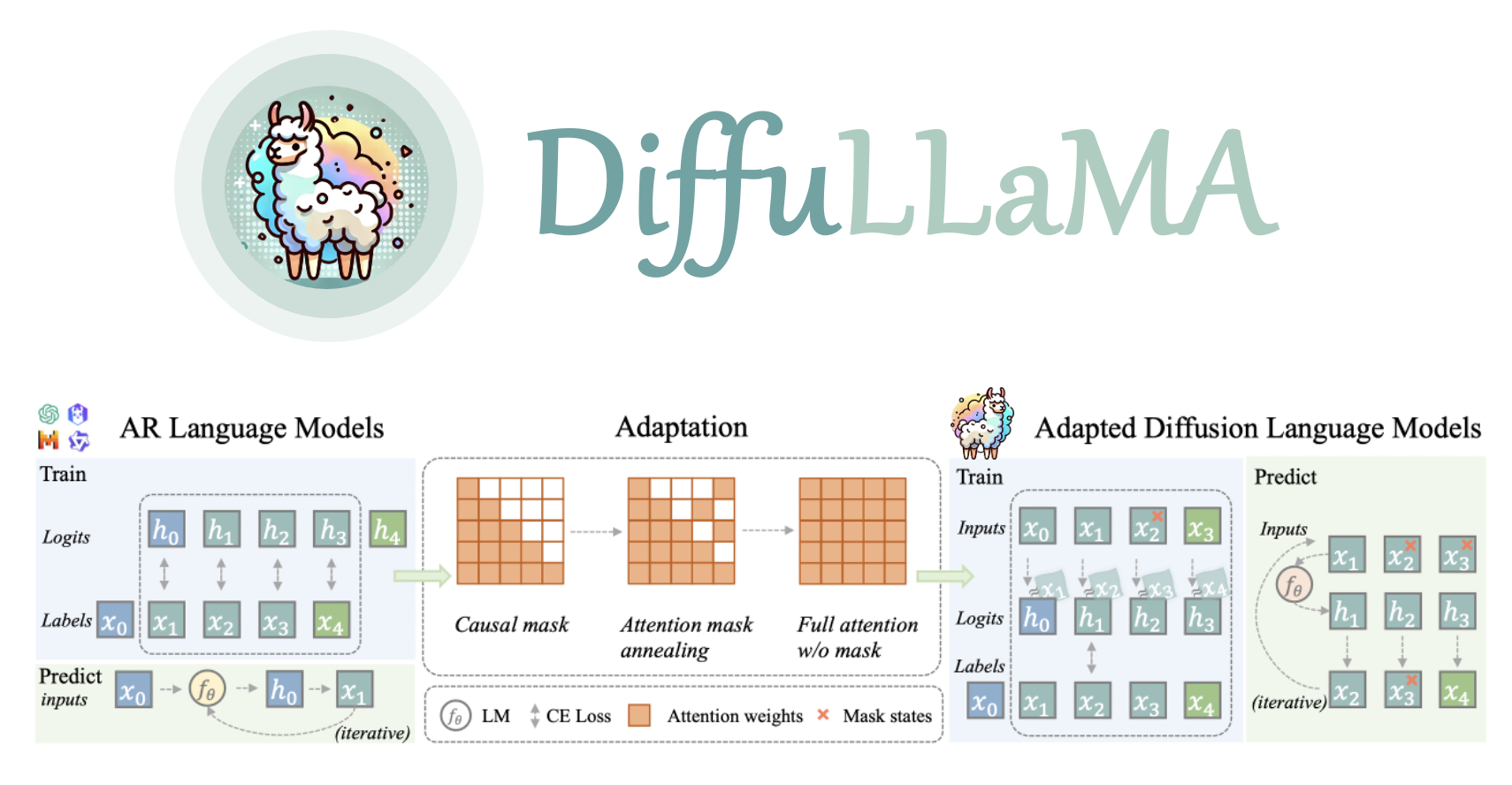

Scaling Diffusion Language Models via Adaptation from Autoregressive Models (ICLR 2025)

Shansan Gong*, Shivam Agarwal*, Yizhe Zhang, Jiacheng Ye, Lin Zheng, Mukai Li, Chenxin An, Peilin Zhao, Wei Bi, Jiawei Han, Hao Peng, Lingpeng Kong

DiffuLLaMA

Initial Exploration for Text Diffusion

Beyond Autoregression: Discrete Diffusion for Complex Reasoning and Planning (ICLR 2025)

Jiacheng Ye, Jiahui Gao, Shansan Gong, Lin Zheng, Xin Jiang, Zhenguo Li, Lingpeng Kong

Code

Diffusion of Thoughts: Chain-of-Thought Reasoning in Diffusion Language Models (NeurIPS 2024)

Jiacheng Ye*, Shansan Gong*, Liheng Chen*, Lin Zheng, Jiahui Gao, Han Shi, Chuan Wu, Zhenguo Li, Wei Bi, Lingpeng Kong

DoT

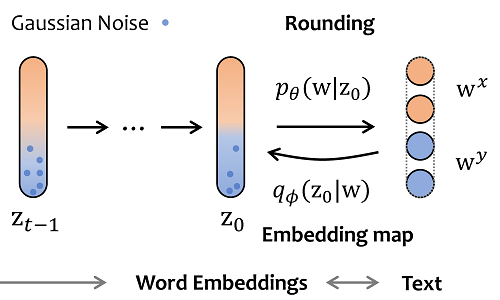

DiffuSeq-v2: Bridging Discrete and Continuous Text Spaces for Accelerated Seq2Seq Diffusion Models

Shansan Gong, Mukai Li, Jiangtao Feng, Zhiyong Wu, Lingpeng Kong

Code | An accelerated version of DiffuSeq in which discrete noise bridges the training and sampling stages, reducing the time cost of both.

DiffuSeq: Sequence to Sequence Text Generation With Diffusion Models

Shansan Gong, Mukai Li, Jiangtao Feng, Zhiyong Wu, Lingpeng Kong

DiffuSeq

🎊 Honors and Awards

- 2024 ACL, Outstanding Paper

- 2024 Tencent Rhino-bird Research Elite Program, Outstanding Student

- 2022 SIGIR Student Travel Award

- 2022 Outstanding Graduate in Shanghai Municipality

- 2019 Outstanding Undergraduate in SJTU

💬 Invited Talks

- 2025.12, “DiffuCoder,” Discrete Diffusion Reading Group (d-llms.com).

- 2025.11, “Diffusion Language Models: From Fundamentals to Better Coding with RL,” Jia Li’s Group, Tsinghua University.

- 2025.06, “Introducing Diffusion Large Language Models (dLLMs): DiffuLLaMA and DiffuCoder,” Google Efficiency Research.

- 2025.06, “The Journey of Text Diffusion Models,” Noah’s Group, UW NLP.

- 2023.06, “DiffuSeq,” Youth PhD Talk (ICLR 2023), AI Time.

- 2023.05, “Incorporate Diffusion Models into Conditional Text Generation,” Global Lunch Seminar, SJTU CS Department.

📖 Education

- 2019.06 - 2022.03, Master's, Computer Science, SEIEE, Shanghai Jiao Tong University.

- 2015.09 - 2019.06, Bachelor's, Information Engineering, SEIEE, Shanghai Jiao Tong University.

💻 Internships

- 2025.01 - 2025.08, Research Intern, Diffusion Text Generation, Apple MLR, Seattle.

- 2023.11 - 2024.10, Research Intern, Diffusion Text Generation, Tencent AI Lab, Shenzhen.

- 2021.12 - 2022.03, RE Intern, Product Categorization, Meituan, Shanghai.

- 2021.06 - 2021.10, SDE Intern, Bing Search Optimization, Microsoft STCA, Beijing.

- 2019.12 - 2022.03, CTO, iWenBooks App Development, Yousheng Tech Inc., Shanghai.

📌 Services

- Conference Reviewer: COLING 2022, ACL 2023, NeurIPS 2023-present, EMNLP 2023, ICLR 2024-present, ARR 2024-present

- Journal Reviewer: ACM Computing Surveys, IEEE Journals, TPAMI

- TA at HKU: COMP2121 (Discrete Mathematics), COMP7104 (Advanced Database Systems)

- One of the hosts of the HKU Seminar

“All those moments will be lost in time, like tears in rain.” – Roy Batty (Blade Runner)